DART: A Survey Tool for Employee Productivity

Businesses go to extreme lengths to assess and monitor employee productivity. For many organizations, the first step in performing that assessment is hiring Deloitte to do it for them. One tool Deloitte uses to perform these assessments is a gruelingly detailed survey process. In it, middle managers consider each of their subordinates and itemize, by percentage, how that person spends a year's worth of time across different activities.

Our Task

The survey process is daunting and tedious no matter how you spin it. Making matters worse, it is administered entirely through email and Excel spreadsheets. This is a headache for the consultants who create and administer the surveys, the client managers who fill them out, and the project stakeholders who want to monitor progress and interpret the results. Beyond the hassle, it compromises the accuracy of survey results and managers' willingness to complete them. Accordingly, a window of opportunity opened: our team was hired on an investment budget to create a web app for building and administering these surveys and aggregating the results.

The * :

Spoiler: this one's about things not going as planned. Six weeks into the project, our funding was pulled as the feasibility of development began to waver (more on that later). Another designer and I had pored over this project, producing countless wireframes and specs in that time, and we were beyond disappointed that we wouldn't see it come to life. Regardless, it was an awesome opportunity for me to sink my teeth into a complex user interaction and design an experience different from the dashboard work our team often produces. Not to mention the invaluable (though painful) lessons that come from failure.

Project Personas

The nature of the survey involves handoffs between many users and stakeholders along the way. Accordingly, we needed to consider the ways that different types of users would interact with the tool. A summary of those main users is below:

- Probably a Deloitte consultant

- Savvy, organized, comfortable with complexity

- Relatively junior in rank; will probably need a reviewer to oversee work before it is sent out

- Deloitte consultant or manager

- May be the same person as the Creator, but not necessarily

- Will need frequent communication with knowledgeable client-side folks to administer the survey accurately

- Client-side middle manager in functions like HR and finance

- Probably resentful and impatient about the survey process

- May be a below-average tech user; usability is very important

- Senior-level Deloitte or client-side project stakeholder

- Vested interest in maximum compliance among survey respondents

- May play an offline role in motivating or driving compliance

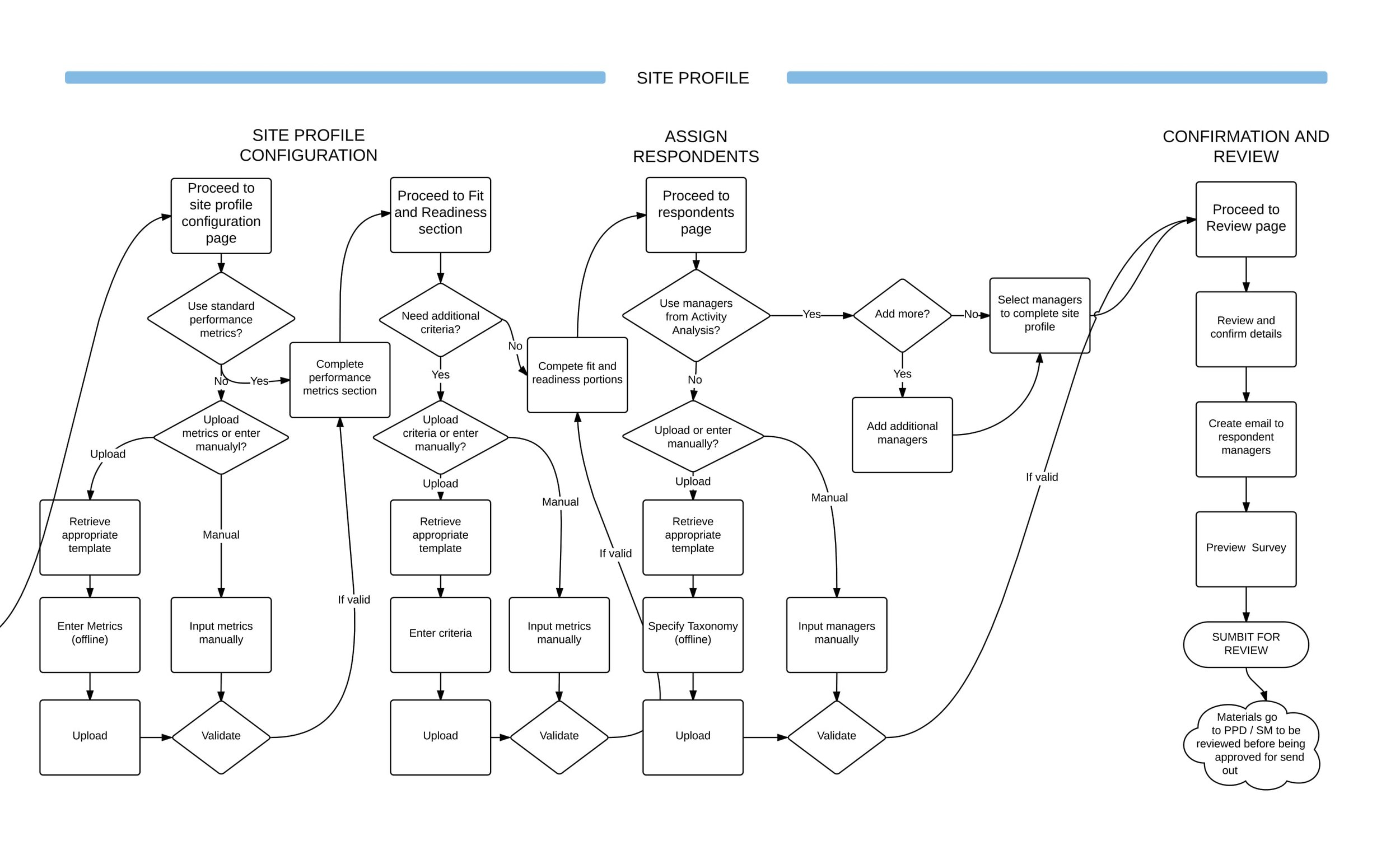

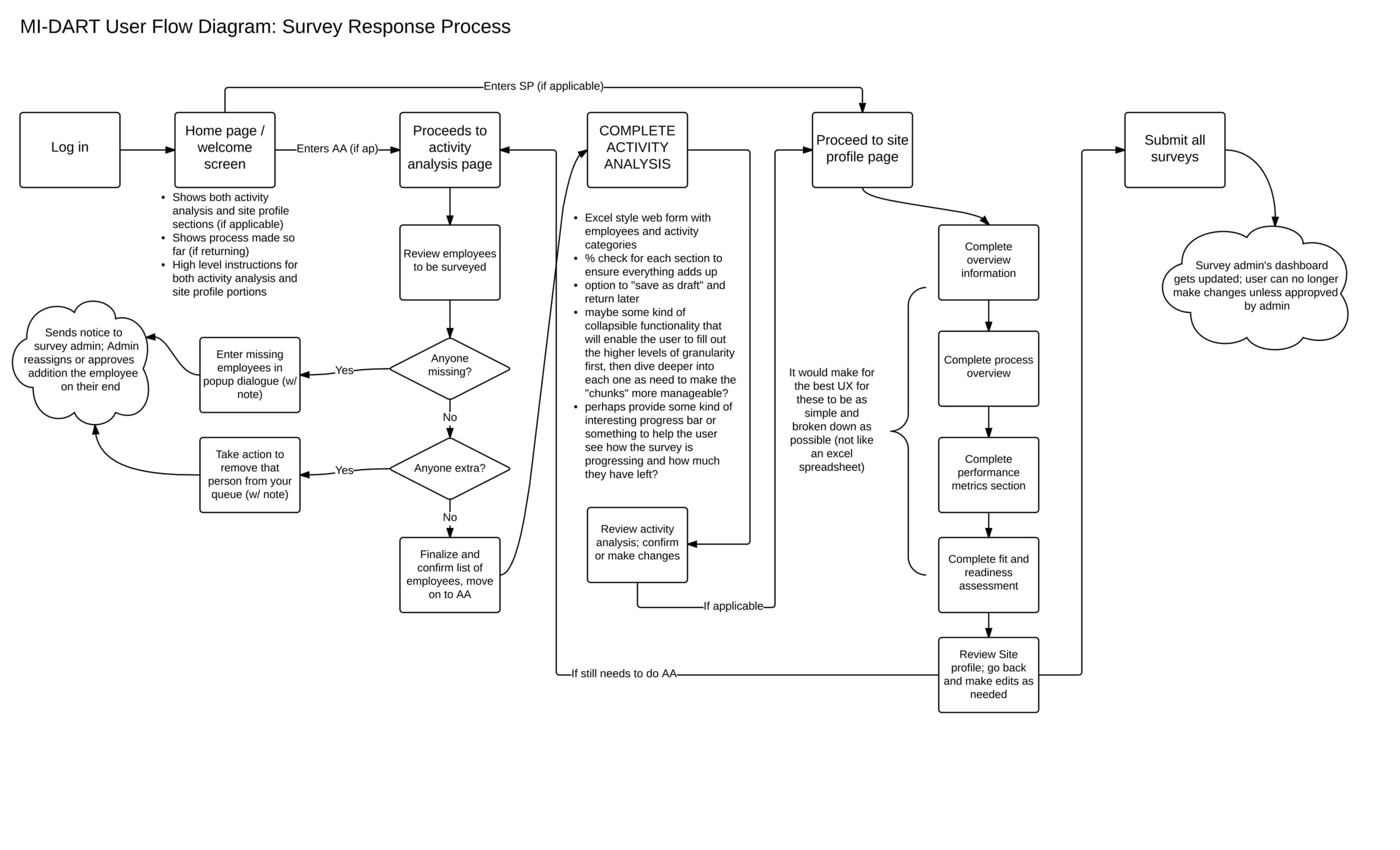

User Flows

One exercise I led to get the ball rolling was developing user flows for the tasks that followed a linear path: the Survey Creation and Survey Respondent processes. This helped my partner and me immerse ourselves in the users' worlds and wrap our heads around all the funny corner cases they might walk themselves into.

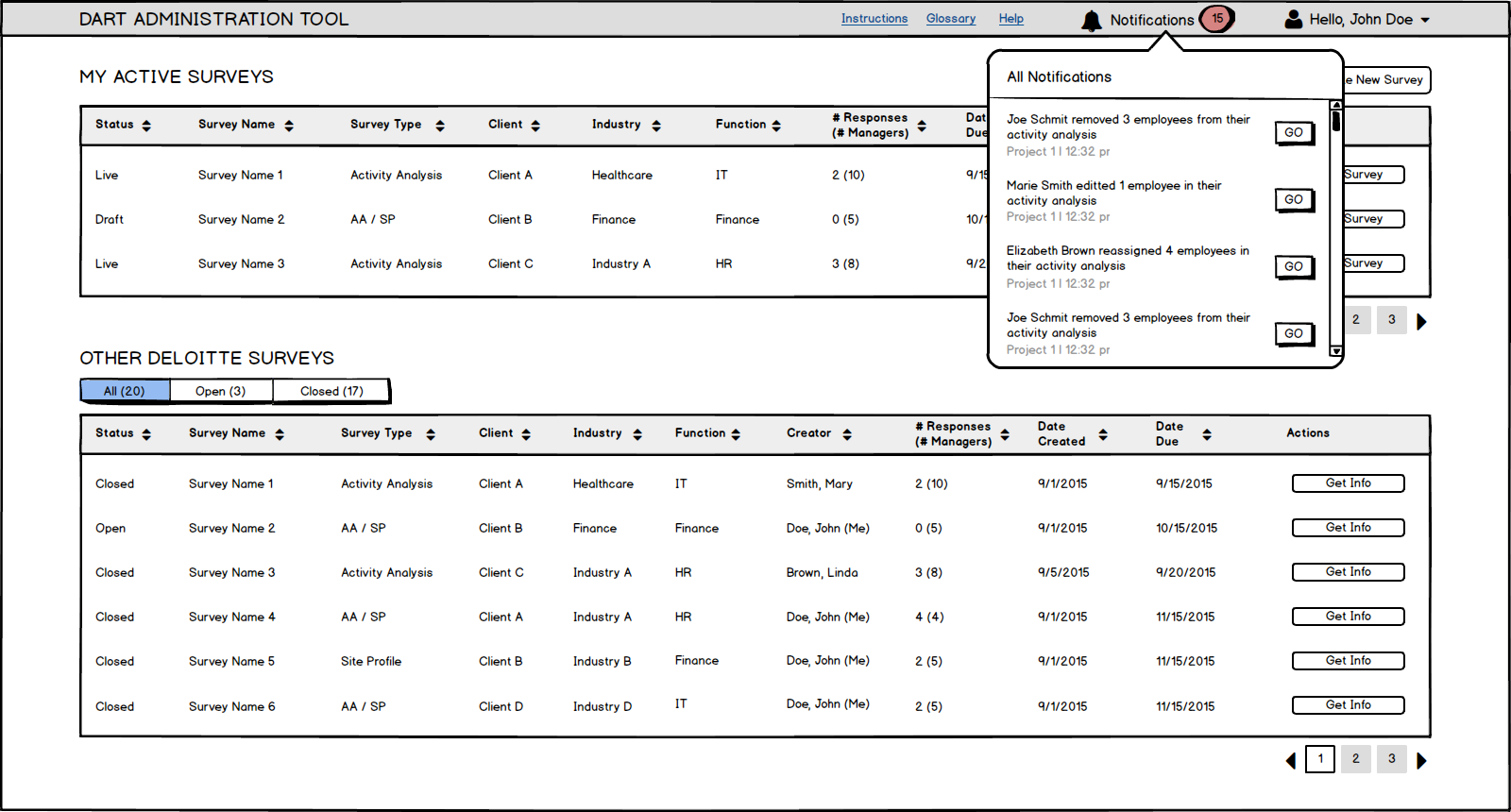

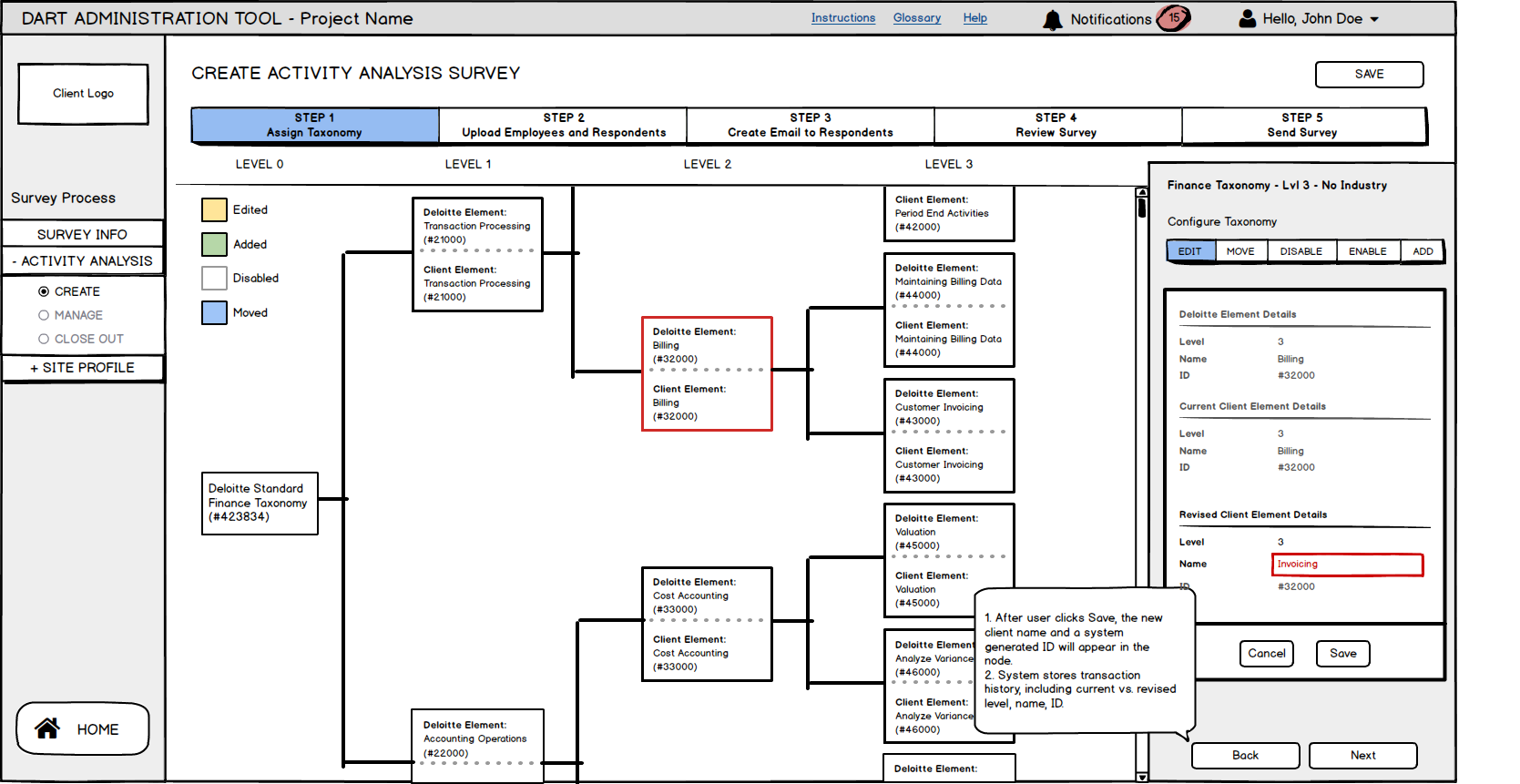

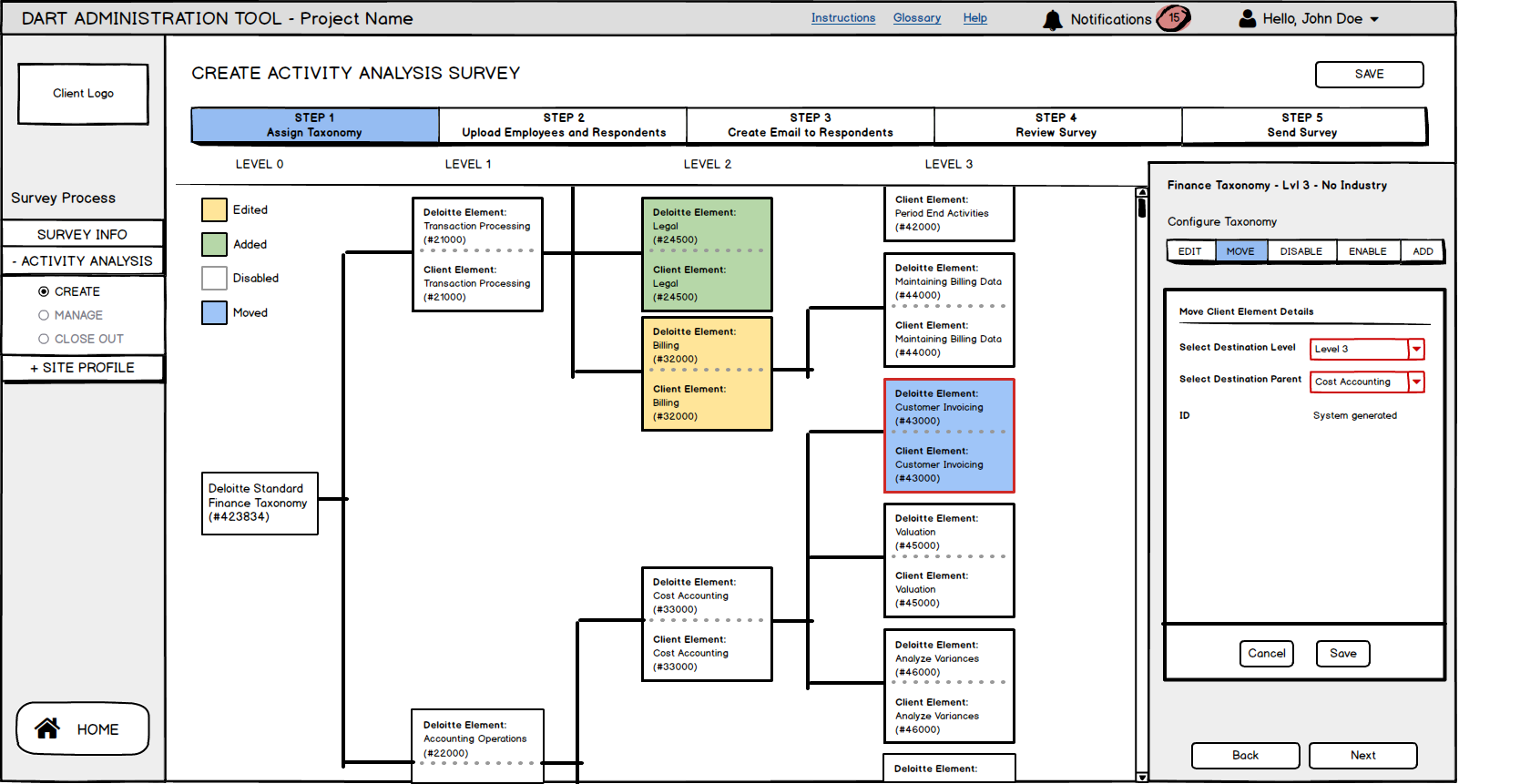

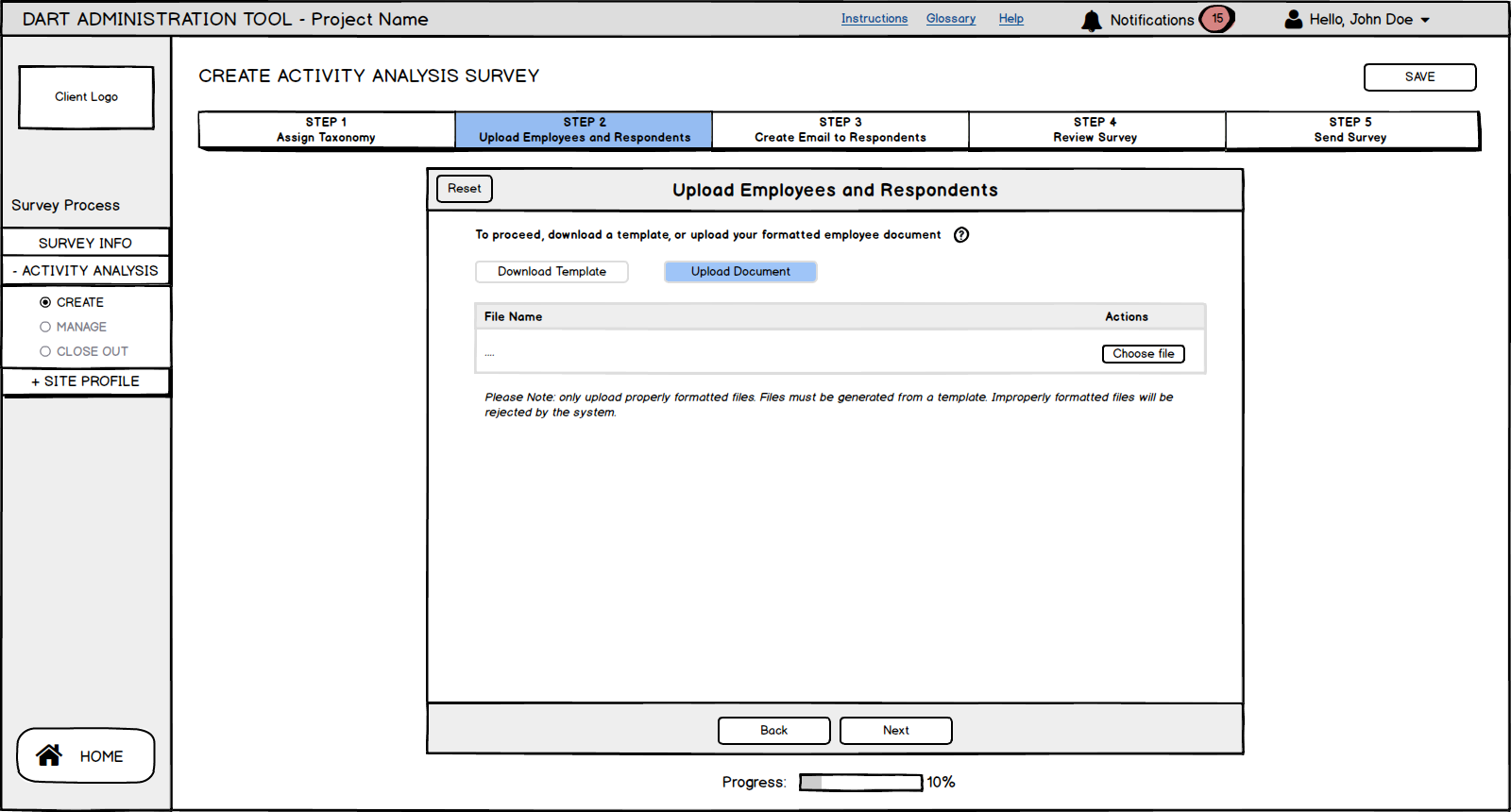

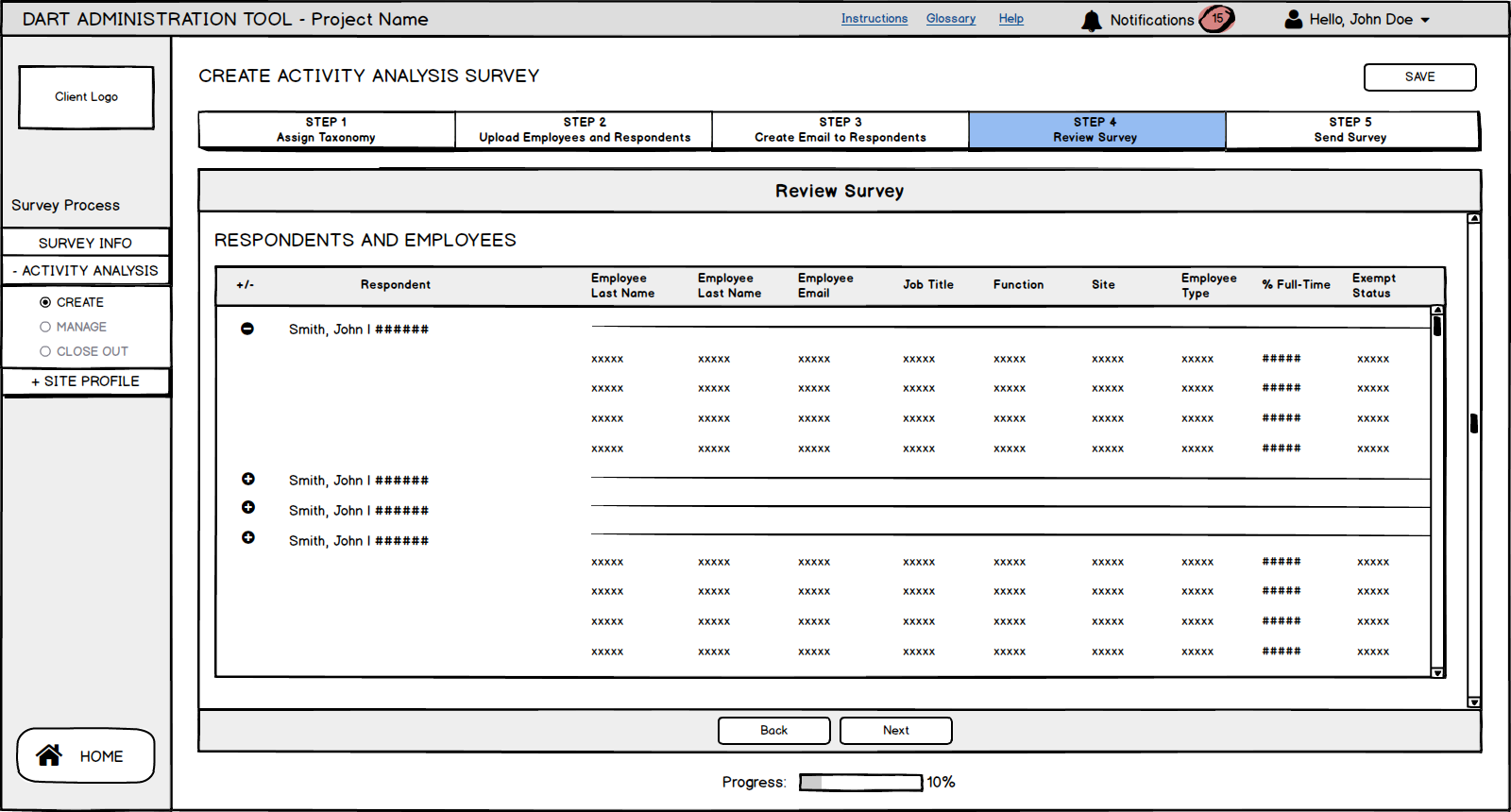

Low-Fidelity Wireframes

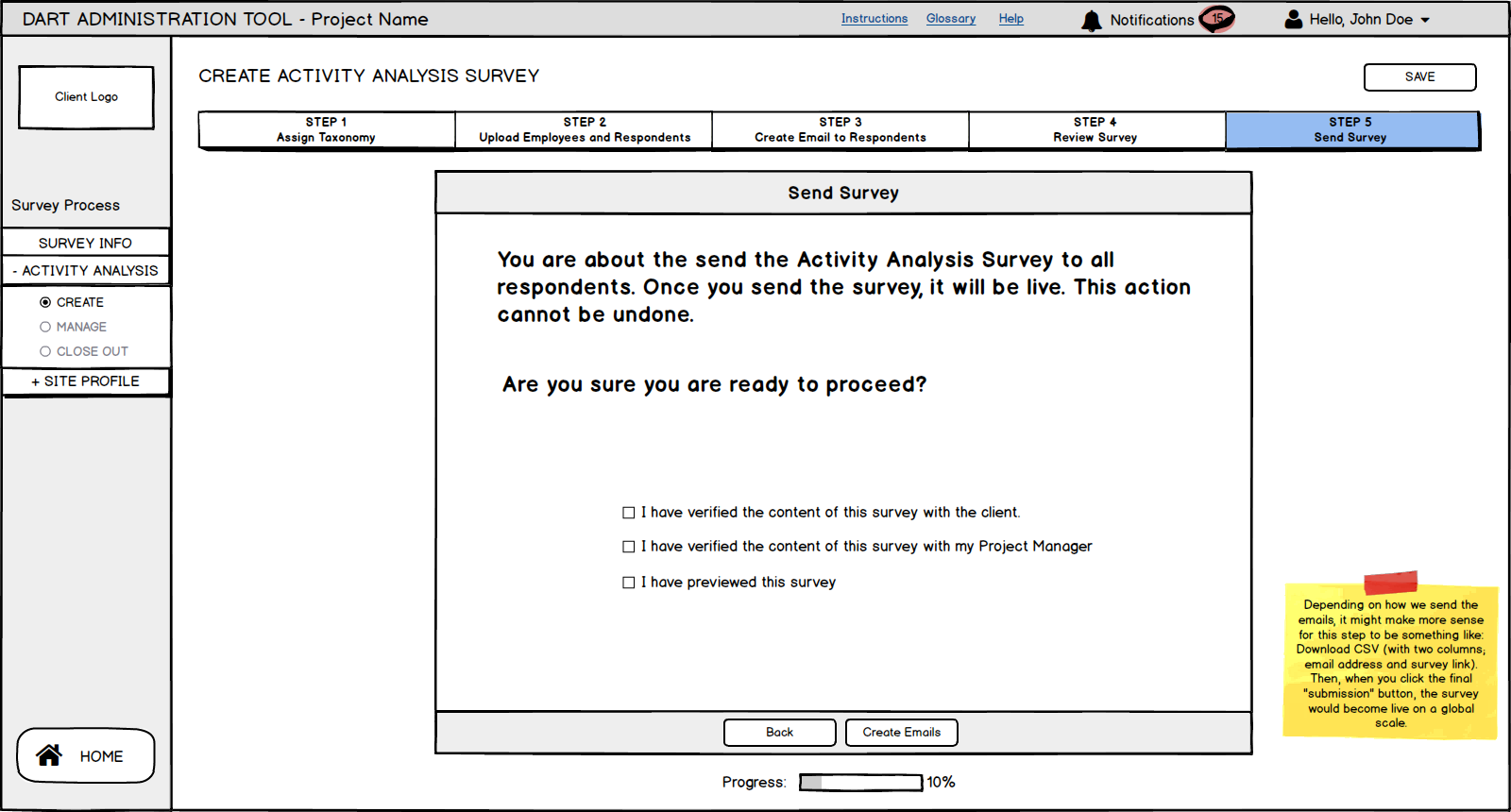

Once we had vetted the various user flows and UX constructs, we began producing wireframes in earnest. We divvied up the work, and I took on the Survey Creation and Survey Administration processes. A sample of these Balsamiq wireframes is shown inline, and you can see a more comprehensive demonstration in the following InVision links:

The End?

As I mentioned above, this project came to an abrupt and unfortunate halt when our funding was pulled about six weeks in. This caught me off guard, and I was upset and disappointed that the hard work I had done would never see the light of day. It was also discouraging to know that, without a solution, these survey teams would continue to rely on a poor system for conducting their surveys. But every disappointing outcome comes with a silver lining: the lessons learned. Here's a summary of what I think went wrong with the project and how I would do it differently today.

Issue 1: Lack of a dedicated project planning phase.

When the project kicked off, DART was slated to be the largest application our team had ever set out to build. Our bread and butter was short-term, six-to-eight-week dashboard projects, and we didn't understand the level of planning required to pull off an application like this. As a result, the design team basically hit the ground running on wireframes right after kick-off. In hindsight, this was a very reductive approach to such a large and complex project. It underestimated the value of developer and SME input during the design phase, and therefore encouraged the design team to design an entire platform based on assumptions. The result was confusion and a lack of team alignment. We could have saved ourselves a lot of headaches if we had dedicated two weeks or so to project planning before diving in.

Issue 2: Design was overly siloed from development.

This project used a waterfall development cycle that created many misunderstandings between design and development. The way people were allocated to the project was largely to blame. During the six weeks the project lived, the assigned developers were not truly dedicated to it; they were still booked full-time on other client work. Consequently, they didn't have the bandwidth to weigh in on design concepts, think through the technical infrastructure, or help scope out a phased delivery.

Issue 3: Designing excessively large chunks of the application between check-ins.

When my partner and I began designing wireframes, we were production fiends. We would hole up in a conference room, work through our ideas, sketch, and produce crazy volumes of assets. It was incredibly productive, or so it felt. The piece we were missing was adequate feedback. By the time we finally reviewed our progress with the team, the amount we had to present was overwhelming, and we had gathered so much knowledge about the design problem that the rest of the team couldn't keep up with our thought process. The result was a massive set of wireframes that the internal project team didn't fully understand.

Today I would...

If I could do this project over again as my older, wiser self, there's one big thing I would do differently in hopes of avoiding the above issues: advocate tirelessly for a more agile approach. Because our team was used to shorter, simpler projects, we were not well-versed in the best methods for complex application development. Some of the benefits of this approach would be:

- A dedicated planning period. A "Sprint 0" for project planning would give the entire team the time and brain power to gather requirements from the business side, draft a site map, create a UX construct, and think through the tech stack. This would produce a comprehensive project blueprint, which would facilitate subsequent sprint planning, help us set expectations with the business, and enable us to scope out a phased delivery approach starting with an MVP.

- A manageable, segmented workload. Using a two-week sprint structure, we could break the work into manageable, prioritized chunks of design and development tasks, which would ease the hectic, overwhelming feeling of tackling a big project.

- A system of feedback and accountability. Sprint planning and review meetings at either end of each sprint would foster ownership and accountability. Daily stand-ups and twice-weekly reviews would keep everyone aware of progress and give contributors the opportunity to voice concerns, offer assistance, or raise any blockers.